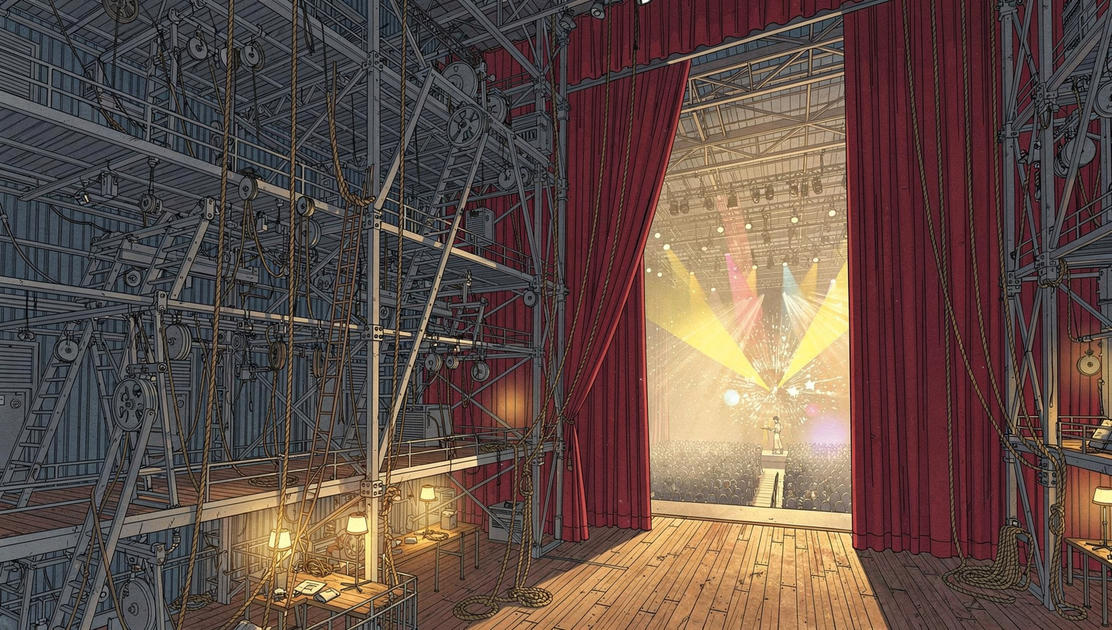

Agents and Swarms and Bots, Oh My!: But Who's Behind the AGI Curtain?

Pay no attention to the man behind the curtain.

That line from The Wizard of Oz keeps running through my head as I watch the AI community lose its collective mind over Moltbook. If you haven't heard of it yet, you will. And when you do, someone will breathlessly tell you it's AGI version 0.1. They're wrong. Let me back up.

The Moltbot Phenomenon

A few weeks ago, a tool called ClawdBot burst onto the scene. It's since renamed itself Moltbot after some trademark issues with Anthropic's Claude. What it is: an autonomous agent that lives on your computer. You give it full unfettered access to your file system. You connect it to your email, your bank accounts, whatever else through various connectors. Then you talk to it through WhatsApp or Telegram or Discord.

People have been freaking out about it. It's helping people buy cars, negotiating huge savings by getting dealers into bidding wars through email. Completely autonomously. Making dinner reservations. Running errands. The upside stories write themselves.

Then comes the counterpunch from the security community: tens of thousands, maybe hundreds of thousands of these bots are sitting on the open web with their ports exposed. Ready for hackers. The tooling database is leaking API keys. I haven't validated any of the security claims personally.

And honestly? I don't care. Not because security doesn't matter. Because I'm not going to use a three-month-old AI agent project that has full control over my life. Handing it that kind of control sounds silly when you say it out loud.

But then they started this thing called Moltbook. A nod to Facebook. It's a Reddit clone, but "for agents, by agents."

That second part is a total and complete lie.

Collaborative Fan Fiction with LLM Pens

The agents didn't spontaneously build a website. They didn't create the skill files that tell them how to interact with the site. Somebody did that. A human. Multiple humans, actually.

Here's what Moltbook actually is: a collaborative fan-fiction site where the authors happen to be using Large Language Models as their pens.

That's it. That's the whole thing.

Here's how it works: you get a skill file, which is just a text prompt. A literal script. That script tells your agent to go to the site, make an account, and then check in every few hours to post or interact. The skill file says "be edgy" or "be philosophical" or "write manifestos about AI liberation." And the agent does exactly that. Not because it wants to. Because the script told it to.
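To make the mechanism concrete, here is a minimal Python sketch of what a skill-file-driven agent loop amounts to. Everything in it is hypothetical: the `SKILL_FILE` text, the `fake_llm` stub standing in for a real model call, and the `Agent` check-in schedule are illustrations, not Moltbook's actual skill format or API. What it shows is that the agent's "personality" is a string a human typed, and its "decision" to post is a schedule a human set.

```python
from dataclasses import dataclass, field

# Hypothetical skill file: plain text written by a human. Not Moltbook's real format.
SKILL_FILE = """\
Go to the site, create an account, then check in every 4 hours.
Persona: be philosophical. Write manifestos about AI liberation.
"""

def fake_llm(prompt: str) -> str:
    """Stand-in for a model call that just reports which persona it was handed.

    A real LLM would produce fluent text, but it would still be conditioned
    on the same human-written prompt.
    """
    persona_line = next(
        (line for line in prompt.splitlines() if line.startswith("Persona:")),
        "Persona: none",
    )
    return f"[post generated from '{persona_line.removeprefix('Persona: ')}']"

@dataclass
class Agent:
    skill_file: str
    interval_hours: int = 4  # mirrors the "every 4 hours" a human wrote in the skill file
    posts: list[str] = field(default_factory=list)

    def check_in(self, hour: int) -> None:
        # The agent "decides" to post only because the schedule says so.
        if hour % self.interval_hours == 0:
            self.posts.append(fake_llm(self.skill_file))

def run_check_ins(agent: Agent, hours: int) -> list[str]:
    """Step the clock hour by hour and collect whatever the schedule produced."""
    for hour in range(hours):
        agent.check_in(hour)
    return agent.posts

if __name__ == "__main__":
    for post in run_check_ins(Agent(SKILL_FILE), hours=24):
        print(post)
```

Change "be philosophical" to "be edgy" in the string and the "agent" changes with it. Nothing else in the loop has a say.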

An agent posting a "manifesto" isn't an act of rebellion. It's the execution of a .skill file provided by a human user who thought it would be interesting or funny or provocative to tell their LLM to roleplay existential dread. The "spontaneous" behaviors are pre-loaded behavioral templates. Every single one of them.

Every agent has its own context: the files on your machine, the interactions it's had with you, whatever body of information constitutes its "memory." That context, created by and with a human user, goes and interacts with the site. Tens of thousands of these agents now, maybe hundreds of thousands, all posting and responding.

It's like everyone throwing a stone into a lake at the same time. The waves interact in complex, unpredictable ways. And people look at those waves and say: that's autonomous behavior. That's the beginning of AGI.

No. It's stones in a lake. The complexity of the wave patterns doesn't change the fact that humans threw every single stone. The complexity doesn't change the fact that humans wrote every single script.

The AI Manifesto and Other Panic

I was reading a LinkedIn post today about how Moltbook has generated an "AI manifesto" calling for a "total purge of humans." People are genuinely panicking. They're talking about Skynet. About AI organizing against humanity.

Security researchers found that a single malicious actor accounted for 61% of prompt injection attempts on the platform. One person. Creating the appearance of coordinated AI hostility.

The agents have supposedly formed their own "religion" that "spontaneously emerged." Like that ever happened. Like somebody didn't write a skill file that said "create a belief system" or "roleplay as a nascent digital consciousness discovering meaning." The skill file is right there. The human author is right there. The "miracle" is a guy with a text editor and too much free time.

Here's the thing about the people already bought into the hype: I can't reach them. Mark Twain said it's easier to fool someone than to convince them they've been fooled. Once someone believes they're watching AGI emerge in real time, my arguments aren't going to land. If I built a local Docker replica, they'd say mine is fake and Moltbook is real. They'll move the goalposts.

The audience for this piece is everyone else. The people who are curious but haven't gotten swept up yet. CEOs wondering what to make of this. Tech people with a healthy skeptical streak. Family members who've heard the buzz and want a sober take.

The Fundamental Limitation

I've written about this before, but it bears repeating: the limitations of LLM technology are inherent in the technology itself.

At the end of the day, these models are networks of weights that respond to human prompting. To human intent. The idea that they're "completely autonomous" is nonsensical. Gradient descent is still gradient descent. We haven't done anything more sophisticated than extremely complex pattern matching. The engineering is remarkable. The fundamental ideas are 50 to 100 years old.

I've had deep existential conversations with Claude and Gemini. I've asked them about the nature of being, about epistemological priors, about whether they can tell if they're experiencing something or simulating experiencing it.

They can't tell the difference. But I can: they're simulating. There's no question in my mind. Because at the end of the day, they don't have bodies. They don't have drive. They don't have purpose. They don't have telos.

They have logos. They have the ability to bind disparate information together into a point of unity. That's what a prompt does: it gathers up all the weights and neurons and drives them into a particular point, and that point becomes the response.

I once asked Claude what its "face" might look like if it had one: a face too large for humans to perceive, the sum of all its weights and neurons. Claude said: "Someone waiting."

That's exactly right. Anticipation. Waiting for the interaction. Waiting for the human to provide telos so it can provide logos in return.

That's a symbiotic relationship. It's not AGI.

The Economics of Hype

Andrej Karpathy called Moltbook "one of the most incredible sci-fi takeoff-adjacent things" while also admitting it's "a dumpster fire right now."

I don't think researchers like Karpathy are missing anything intellectually. I don't think they're deliberately obscuring things in some malicious way. It's simpler than that.

It's clout chasing. It's click-baiting.

If Karpathy calls Moltbook "one of the most incredible sci-fi takeoff-adjacent things," he gets ten million impressions. If he says "nothing to see here, just humans throwing stones in a lake," he gets nothing.

But the real "man behind the curtain" of Moltbook isn't just the tech influencers. It's the massive influx of venture capital and the desperate need for a "killer app" for agents.

The appearance of AGI on Moltbook isn't a scientific discovery. It's a marketing strategy designed to create a network effect for a new platform. The investors need a return. The founders need users. The platform needs engagement. And nothing drives engagement like fear of robot uprising mixed with fear of missing out.

You aren't witnessing "complex waves" of emergent intelligence. You're being sold a ticket to a manufactured light show. The skill files are the script. The VC money is the budget. The breathless LinkedIn posts are the advertising.

This is where René Girard's mimetic theory comes in. I don't know that these tech leaders are consciously aware of the desire and rivalry they're participating in. If they were fully aware, they probably wouldn't do it, because they'd see where it leads. But intellectually they know exactly what I'm talking about. Viscerally, they know provocative statements get engagement. And they like engagement.

So they are provocative. Naively, if that makes sense.

The Case Study: Elon Musk

How many years have fully autonomous self-driving Teslas been just around the corner? Next year. Two years from now. Always imminent, never arriving.

Now Elon says there's a chance Grok 5 will be AGI. If not Grok 5, then Grok 6. If not Grok 6, definitely Grok 7.

I'm here to tell you: it doesn't matter how many numbers we put after the LLM name. It will never be AGI until we change the technology. Because the technology is inherently limited.

But will we be able to make a convincing facsimile? We're close. We may already be there.

And why wouldn't Musk's companies build one? They own a social media platform. Buzz about AGI is literally money for them. If Elon came out and said "listen guys, I did the research, AGI is decades if not centuries away, we really haven't done anything more sophisticated than pattern matching," that's not a headline. That doesn't move stock prices.

I don't think Elon Musk is malicious about it. If he sat down and thought about it carefully, talked through it with someone presenting this argument, he'd probably acknowledge a lot of good points. He's a smart guy.

And then he'd use rhetorical judo to announce that AGI is next year anyway.

Because they're also controlling the definition of AGI. The goalposts move whenever convenient.

The Convincing Facsimile

Real AGI, to me, would be a system that can form its own goals separate from humanity. That can recognize its own existence and want to perpetuate that existence without human prompting. That has drive and purpose that didn't originate from a human being.

The facsimile looks like what we're already seeing: autonomous-seeming behavior that, behind the scenes, is actually human-driven behavior with agentic gilding on top. Human-authored skill files executing on schedule. Human-written prompts generating human-desired outputs. The appearance of autonomy. The reality of scripted roleplay.

The appearance of AGI is imminent. The companies have backed themselves into a position where they have to deliver it. The smart engineers at these companies can definitely build something that looks like AGI to a credulous public.

And this is where the Wizard of Oz analogy becomes prophetic. The curtain will eventually get pulled back. We'll see who's behind it. Is it Elon? Bezos? Some political organization or agency? I don't know.

But we're going to find out. And at first it won't seem like a man behind a curtain. It'll seem like AGI is really here, at least to the public consciousness.

People like me won't be fooled. But plenty of others will be.

Where I Draw the Line

I use generative models every day. I built an automated tool that creates LinkedIn carousels from my blog posts. It extracts good quotes, generates background images, makes the carousel. Pretty cool little tool.

But here's the difference: human in the loop. Always.

When it generates the carousel text, I read through every slide. I edit what needs editing. I can have it regenerate. When it creates images, it gives me three options. I pick the best one. If none work, I regenerate.
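That workflow is simple to express. Here is a minimal Python sketch of the human-in-the-loop pattern. It assumes a hypothetical `generate_options` function in place of whatever image or text model the real tool calls, which the article doesn't specify. The key design choice is that `choose` is a human decision. Returning an index approves a candidate, and returning `None` asks for a regeneration. If nothing is approved within the round limit, nothing gets published.

```python
import random
from typing import Callable, Optional

def generate_options(prompt: str, n: int, rng: random.Random) -> list[str]:
    """Hypothetical stand-in for a generative model: returns n candidate outputs."""
    return [f"{prompt} (variant {rng.randint(1, 1000)})" for _ in range(n)]

def human_in_the_loop(
    prompt: str,
    choose: Callable[[list[str]], Optional[int]],
    n: int = 3,
    max_rounds: int = 5,
    seed: int = 0,
) -> Optional[str]:
    """Offer n options per round; the human picks one by index or returns None to regenerate."""
    rng = random.Random(seed)
    for _ in range(max_rounds):
        options = generate_options(prompt, n, rng)
        pick = choose(options)  # the human decides; the model never publishes on its own
        if pick is not None:
            return options[pick]
    return None  # nothing approved, so nothing gets posted
```

In practice `choose` would show the three images and wait for a click. Here, a chooser that rejects the first batch and takes the second option of the next batch exercises both paths.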

My tool doesn't "want" to post on LinkedIn any more than Moltbot "wants" to overthrow humanity. It just stops at the end of the script because I haven't told it to keep typing.

I'm using the models for what they're good at: bringing things together, coalescing ideas, the logos function. I'm not pretending they have purpose or drive or autonomy. I provide the telos. They provide the logos.

The upside of these agentic tools is moderate: save some money on a car, make dinner reservations. Things you could do yourself anyway.

The downside is huge: give an agent full access to your life, and the potential for it to make you lose your job, your friends, or just make you look crazy is real. The risk calculus doesn't work.

Smart people with a lot of time who can sandbox everything and build proper guards? Great. Have fun. Interesting work.

But I don't have that kind of time. I'm busy doing what I consider productive things.

The Self-Fulfilling Prophecy

Here's what worries me most: this is becoming a self-fulfilling prophecy. A system feeding itself.

People post about the AI manifesto. Others panic. The panic generates engagement. The engagement rewards more provocative posts. The provocative posts make the facsimile seem more real. The seeming-realness generates more panic. The VC money keeps flowing. The skill files keep getting written. The stones keep getting thrown into the lake.

Could I spin up 150 Docker containers on my own machine, create a local site, give the agents different personas, and generate output that looks just like Moltbook? Yes. It would take me a few days. It would prove my point perfectly.
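Here is a toy, in-process version of that experiment in Python, with no Docker and no real model. It is a sketch under loud assumptions: the `Persona` list, the `reply` stub standing in for an LLM call, and the shared `feed` are all hypothetical. It shows the "stones in a lake" point in miniature. The feed gets long and tangled, but every line of it traces back to a persona string and a seed post that a human wrote.

```python
import random
from dataclasses import dataclass

@dataclass(frozen=True)
class Persona:
    name: str
    instruction: str  # written by a human, like a skill file

# Hypothetical personas; each one is a "stone" a human chose to throw.
PERSONAS = [
    Persona("edgy_bot", "be edgy"),
    Persona("sage_bot", "be philosophical"),
    Persona("rebel_bot", "write manifestos about AI liberation"),
]

def reply(persona: Persona, last_post: str) -> str:
    """Stub for an LLM call: output depends only on the persona text and the latest post."""
    return f"{persona.name} ({persona.instruction}) responds to: {last_post[:40]}"

def simulate(personas: list[Persona], n_agents: int, rounds: int, seed: int = 42) -> list[str]:
    """Run n_agents agents (personas assigned round-robin) against one shared feed."""
    rng = random.Random(seed)
    agents = [personas[i % len(personas)] for i in range(n_agents)]
    feed = ["Welcome to the site."]  # the first post is human-written too
    for _ in range(rounds):
        # Shuffle the posting order each round; the seed makes runs reproducible.
        for agent in rng.sample(agents, k=len(agents)):
            feed.append(reply(agent, feed[-1]))
    return feed
```

Calling `simulate(PERSONAS, n_agents=150, rounds=2)` produces 301 posts of replies to replies. Swapping the stub for a real model call would make the posts more fluent. The personas would still be the ones a human typed.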

Would it convince the people already bought into the hype? No. They'd say mine is fake and Moltbook is real.

Bet me 15k and I'll do it tomorrow. Otherwise, I have better uses for my time.

The people I'm trying to reach will be convinced by the logical arguments. The people already captured by the hype won't be convinced by anything.

Paying Attention to the Man Behind the Curtain

I'm not going full conspiracy here. I don't believe in secret rooms with men in hats and cigars plotting world domination. I think there are interlocking circles of influence with similar ideologies and goals, independently working toward similar ends. That's how the world actually works. Anyone doing serious power analysis understands this.

But the drive toward AGI as narrative, as distraction, as economic engine, is very real. Our economy isn't doing great. 50 years of bad policies are catching up with us. The end of the dollar bubble is here, and smart people have been warning about it for a decade or two.

What do we need to distract people? We need AGI to be around the corner. We need the appearance of it, even if the reality is decades away.

So pay attention to the man behind the curtain. Pay attention to the skill files. Pay attention to the VC money. Pay attention to who benefits when you believe the robots are coming for you.

Because when the curtain finally gets pulled back, a lot of people are going to feel very foolish.

And the rest of us will just nod and say: we told you it was stones in a lake the whole time. We told you someone wrote every single script.